Deep learning has grown explosively and now plays an important role in areas such as healthcare, finance and autonomous vehicles. To address the carbon footprint that comes with this growth, researchers are working to develop models that address both economic and environmental concerns.

Developing and training increasingly large deep learning models consumes vast amounts of computational resources and energy. Powering the necessary hardware therefore leaves a large carbon footprint. Deep learning is here to stay, but efficient computational practices are essential to ensure it develops sustainably.

“In recent years, the research community has made strides towards more efficient deep learning models and hardware optimisations. Techniques such as model pruning, quantisation, and knowledge distillation have enabled more efficient inference and training processes,” explains Karthick Panner Selvam, a Computer Scientist and PhD researcher at the University of Luxembourg’s SnT.

“Furthermore, the development of specialised hardware, like GPUs and TPUs, has significantly improved computational efficiency. These advancements have contributed to reducing the carbon footprint and computational costs associated with deep learning workloads.”

Optimising hardware configurations

A remaining challenge is finding hardware configurations that efficiently match the specific needs of diverse deep learning workloads. This means accurately predicting how deep learning models will perform on different hardware configurations, a task made harder by a quickly evolving landscape of both models, such as GPT, Llama, and Mistral, and hardware, such as GPUs, TPUs, and Graphcore IPUs.

As part of his research, Karthick Panner Selvam is creating a tool to predict the best hardware setups for AI, helping to cut costs and carbon emissions for greener, smarter AI development and deployment.

“My research focuses on developing a predictive performance model for deep learning workloads, aiming to guide developers and researchers in selecting the most efficient hardware configurations. By forecasting training and inference characteristics, my performance model seeks to minimise computational costs and reduce carbon emissions, thereby addressing both economic and environmental concerns.”
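To make the idea of choosing hardware from a forecast concrete, here is a minimal Python sketch. It is an illustration only, not Selvam's tool: the configuration names, runtimes, power draws, prices and grid carbon intensity are all hypothetical, and the selection rule (lowest estimated emissions within a budget) is just one possible policy. It assumes energy is runtime multiplied by average power, and emissions are energy multiplied by the carbon intensity of the electricity grid.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HardwareOption:
    """One candidate setup. All values are hypothetical, for illustration."""
    name: str               # made-up label, not a real product
    predicted_hours: float  # runtime as forecast by some performance model
    power_kw: float         # assumed average power draw in kilowatts
    price_per_hour: float   # assumed rental price per hour


def energy_kwh(option: HardwareOption) -> float:
    """Energy used: runtime multiplied by average power."""
    return option.predicted_hours * option.power_kw


def cost(option: HardwareOption) -> float:
    """Monetary cost: runtime multiplied by hourly price."""
    return option.predicted_hours * option.price_per_hour


def emissions_kg(option: HardwareOption, grid_kg_per_kwh: float) -> float:
    """Estimated CO2 emissions: energy multiplied by grid carbon intensity."""
    return energy_kwh(option) * grid_kg_per_kwh


def pick_lowest_emissions(options, grid_kg_per_kwh, budget):
    """Return the affordable option with the lowest estimated emissions."""
    affordable = [o for o in options if cost(o) <= budget]
    if not affordable:
        raise ValueError("no option fits the budget")
    return min(affordable, key=lambda o: emissions_kg(o, grid_kg_per_kwh))


# Hypothetical candidates: slower hardware is cheaper but runs for longer.
OPTIONS = [
    HardwareOption("config-a", predicted_hours=10.0, power_kw=0.30, price_per_hour=2.0),
    HardwareOption("config-b", predicted_hours=4.0, power_kw=0.70, price_per_hour=6.0),
    HardwareOption("config-c", predicted_hours=2.0, power_kw=1.20, price_per_hour=15.0),
]

if __name__ == "__main__":
    choice = pick_lowest_emissions(OPTIONS, grid_kg_per_kwh=0.5, budget=25.0)
    print(choice.name)
```

With these made-up numbers the fastest configuration has the lowest emissions but exceeds the budget of 25, so the sketch settles on the middle option. The quality of such a choice depends entirely on how accurate the runtime forecast is, which is the part a predictive performance model is meant to supply.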

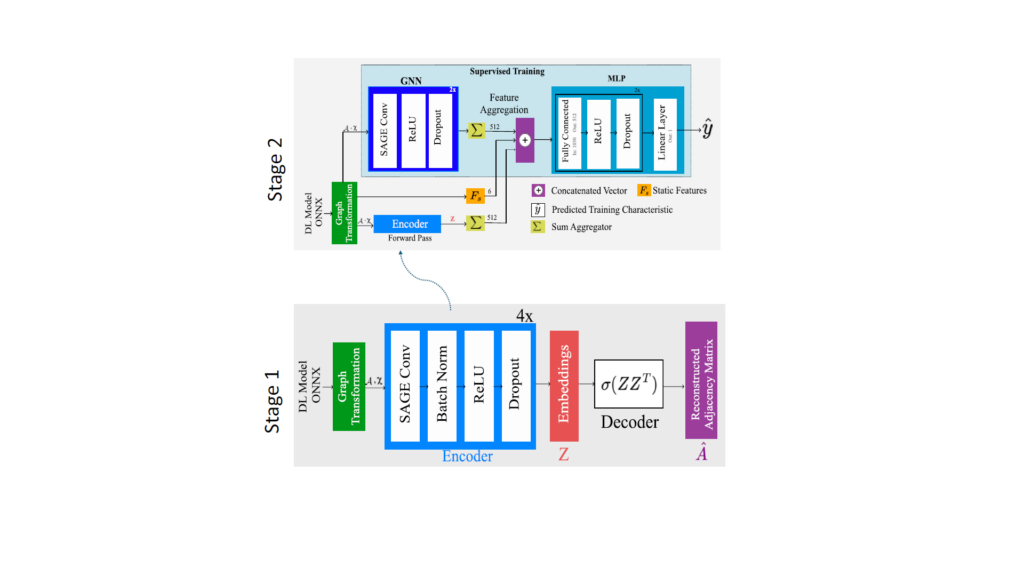

“I have developed a predictive performance model for inference workloads using a graph neural network. The model was published at the reputable Euro-Par conference. I also improved the performance model for training characteristics with semi-supervised learning, and it was published at the top-tier ML for Systems workshop at NeurIPS.”

Selvam also developed performance prediction tools for large language models (LLMs) using tree-based models.

Karthick Panner Selvam is a Computer Scientist and PhD researcher supervised by Mats Brorsson at the University of Luxembourg (SnT) in the SEDAN Group headed by Radu State. He has collaborated with Google DeepMind and Google Research to develop performance models utilising multi-modal learning. He is currently collaborating with scientists from OpenAI and Meta to adapt large language models (LLMs) for optimising Triton kernels. His research has been presented at leading workshops, including ML For Systems @ NeurIPS 2023, WANT @ ICML 2024, and PhD Consortium @ KDD 2024.

MORE ABOUT KARTHICK PANNER SELVAM

What drives him as a scientist

“Passion drives me toward developing sustainable AI technologies. My choice of institution was influenced by its reputation for cutting-edge research in sustainable computing and its collaborative environment.”

What he loves about science

“I love that science and research offer endless possibilities for exploring, solving complex problems, and contributing to sustainable technological advancements.”

Where he sees himself in 5 years

“In five years, I see myself leading a research team dedicated to advancing sustainable AI, contributing to both academia and industry.”

Mentor with an impact

“My supervisor, Mats Brorsson, has played a crucial role in my professional development. His ability to ask thought-provoking questions has significantly impacted my research methods, fostering a culture of critical thinking and creativity. With his guidance, I have overcome challenging situations with clarity and confidence.”

Why he chose Luxembourg for his research

“I chose Luxembourg for my research because the University of Luxembourg is dedicated to high-quality and impactful research in a multicultural environment. The university is known for its emphasis on interdisciplinary collaboration and global perspectives, which fosters innovation across various fields. This unique setting enriches the research experience through cultural exchanges. It aligns with my project’s goals of making a positive societal impact. The university has a strong international reputation and a supportive research ecosystem that provides an ideal platform for groundbreaking work.”

On his work, peer to peer

“My research is focused on using graph neural networks (GNNs) and tree-based models to predict the computational performance of deep learning tasks on different hardware configurations. At first, I focused on inference workloads and employed GNNs to capture the complex dependencies within model architectures. This approach was further refined through semi-supervised learning to improve the accuracy of predictions for training characteristics. The culmination of this work has been the development of a tool that uses tree-based models to predict the performance of large language models. This showcases a novel application of these methodologies in optimising computational resources for deep learning.”
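For readers unfamiliar with tree-based performance prediction, the sketch below shows the general idea in plain Python. It is a small regression tree written from scratch, not the model described in the quote: the features (batch size and accelerator count), the latency values and the depth limit are hypothetical, and a real tool would likely use many more features and an ensemble of trees.

```python
from statistics import mean


def sse(values):
    """Sum of squared errors around the mean (0.0 for an empty list)."""
    if not values:
        return 0.0
    m = mean(values)
    return sum((v - m) ** 2 for v in values)


def best_split(rows, targets):
    """Return the (feature, threshold) pair that lowers SSE most, or None."""
    best, best_score = None, sse(targets)
    for f in range(len(rows[0])):
        # Skip the largest value so both sides of a split are non-empty.
        for t in sorted({r[f] for r in rows})[:-1]:
            left = [y for r, y in zip(rows, targets) if r[f] <= t]
            right = [y for r, y in zip(rows, targets) if r[f] > t]
            score = sse(left) + sse(right)
            if score < best_score:
                best, best_score = (f, t), score
    return best


def fit_tree(rows, targets, depth=2):
    """Build a tree as nested tuples; a leaf is the mean target value."""
    split = best_split(rows, targets) if depth > 0 else None
    if split is None:
        return mean(targets)
    f, t = split
    left = [(r, y) for r, y in zip(rows, targets) if r[f] <= t]
    right = [(r, y) for r, y in zip(rows, targets) if r[f] > t]
    return (
        f,
        t,
        fit_tree([r for r, _ in left], [y for _, y in left], depth - 1),
        fit_tree([r for r, _ in right], [y for _, y in right], depth - 1),
    )


def predict(tree, row):
    """Walk from the root to a leaf and return its stored mean."""
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if row[f] <= t else right
    return tree


# Hypothetical measurements: (batch_size, accelerator_count) -> latency in ms.
ROWS = [(1, 1), (1, 2), (8, 1), (8, 2)]
TARGETS = [10.0, 6.0, 40.0, 22.0]
TREE = fit_tree(ROWS, TARGETS)

if __name__ == "__main__":
    print(predict(TREE, (8, 2)))
```

The tree learns thresholds on workload and hardware features from measured runs and then returns the average latency of the matching group for a new configuration. Tree models are quick to train and to query, which is one plausible reason to prefer them when a forecast is needed before any hardware is provisioned.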
