A mathematician is developing algorithms that can analyse encrypted data without ever deciphering it, a crucial step in keeping that data confidential.

The development of contact tracing applications during the COVID-19 epidemic quickly split society in two. Some were willing to sacrifice privacy for the sake of public health, arguing in favour of an app capable of locating people. Others demanded limited, anonymous, GPS-free solutions to avoid the spectre of an omniscient government.

The ideal would have been to combine the two: an app capable of locating people who have been close to virus carriers while ensuring that the data cannot be read by the authorities. However, this would require algorithms that can work on encrypted data, something that remains out of reach in practice to this day.

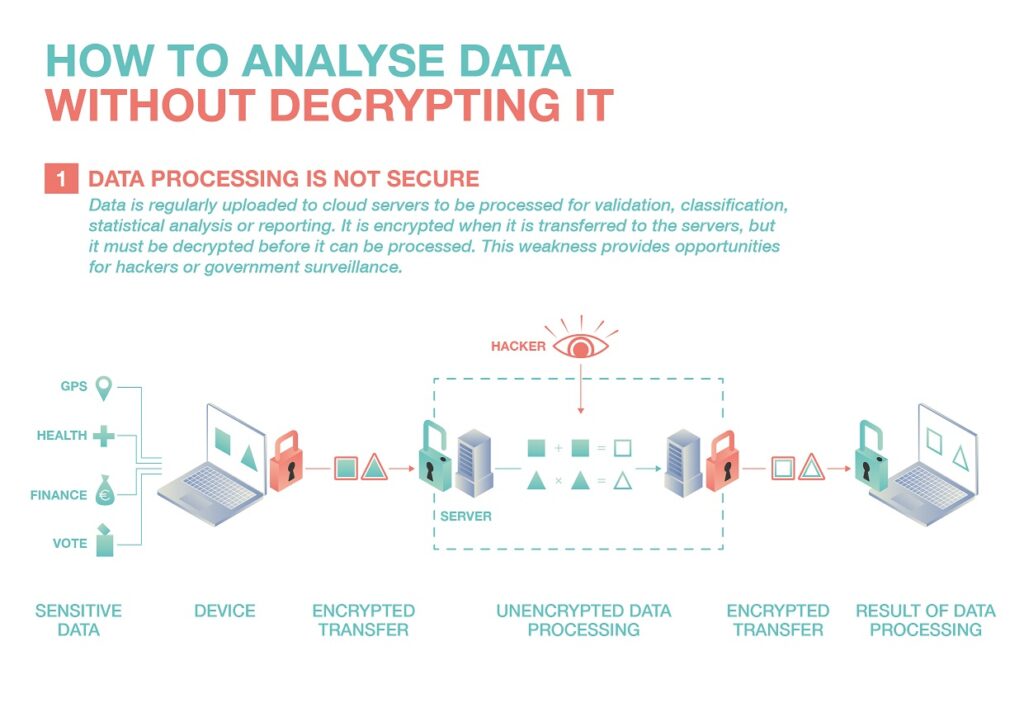

“This is one of the major weaknesses of current encryption systems,” explains Jean-Sébastien Coron, a mathematician at the University of Luxembourg. “Data is encrypted both during transmission and storage, which is good. But it has to be decrypted to be used in applications”.

The French-born researcher is developing techniques that ensure that sensitive data is not only encrypted when it is transferred over the Internet but also remains encrypted while it is analysed. It would thus remain unreadable from start to finish, from its storage on servers to its passage through data centres. Since it would never be deciphered, it could not be read by the authorities or compromised in a hacker attack.

Such an assurance of confidentiality would be valuable for any process that uses confidential information: the analysis of sensitive medical or financial data, the search for criminals without compromising the location of innocent people, or the certification of electronic voting without revealing the identity of voters.

“M$#,8T>;[A5;=;pr!”

For now, running a conventional analysis algorithm on encrypted data gives a meaningless result. The reason: encrypted information is, by definition, unintelligible. It is, for example, impossible to check whether a mobile phone was near the geographical point 49.6229485 North, 6.1102483 East when its GPS coordinates are encrypted as “M$#,8T>;[A5;=;pr!”, or to translate the diagnosis “colonoscopy: ascending colon cancer” once it has been transformed into “Xdy19!aja£+T”.

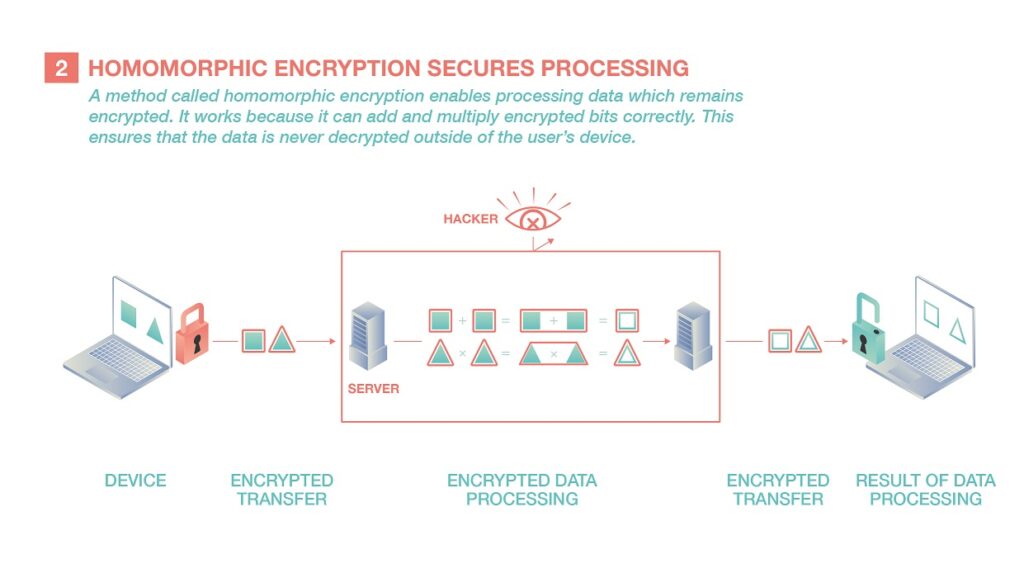

The situation changed in 2009. Craig Gentry, a computer scientist at Stanford University, unveiled an encryption method that allows data to be analysed without being deciphered. With it, adding and multiplying encrypted bits gives, once decrypted, the same result as performing those operations on the original bits. Since these two operations form the basis of all computer logic, any algorithm can in principle be run on protected data. This is the promise of the technique known as “fully homomorphic encryption”.
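To make the idea of adding and multiplying encrypted bits concrete, here is a minimal toy sketch in Python. It is not Gentry's 2009 construction and it is not secure: it follows the much simpler integer-based idea later published by van Dijk, Gentry, Halevi and Vaikuntanathan, with tiny parameters chosen purely for illustration. The function names `keygen`, `encrypt` and `decrypt` and the parameter sizes are assumptions made for this example.

```python
import secrets


def keygen(bits: int = 64) -> int:
    """Return a toy secret key: a random odd integer with exactly `bits` bits."""
    return secrets.randbits(bits) | (1 << (bits - 1)) | 1


def encrypt(key: int, bit: int) -> int:
    """Hide one bit as (multiple of the key) + (small even noise) + bit."""
    q = secrets.randbits(128)  # large random multiplier (illustrative size)
    r = secrets.randbits(8)    # small random noise (illustrative size)
    return key * q + 2 * r + bit


def decrypt(key: int, ciphertext: int) -> int:
    """Strip the multiple of the key, then read the parity of what is left."""
    return (ciphertext % key) % 2


key = keygen()
for a in (0, 1):
    for b in (0, 1):
        ca, cb = encrypt(key, a), encrypt(key, b)
        # Adding two ciphertexts adds the hidden bits modulo 2 (XOR).
        assert decrypt(key, ca + cb) == a ^ b
        # Multiplying two ciphertexts multiplies the hidden bits (AND).
        assert decrypt(key, ca * cb) == a & b
```

Two things are worth noticing in this sketch. Each operation makes the hidden noise larger, so only a limited number of multiplications can be chained before decryption fails; Gentry's contribution included a way of refreshing ciphertexts to get around this limit. And even in this toy, a single bit becomes an integer nearly two hundred bits long, a small-scale hint of the size problem.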

One terabyte per 100 words

However, this approach remains more theoretical than practical, as the encryption process is far too inefficient: it multiplies the length of messages by a billion. Encrypting a paragraph of text (around a kilobyte) thus generates an encrypted message 100 million pages long (around a terabyte). This explosion in the size of the data drastically increases the computing power needed to analyse it: a calculation that normally takes one second would take several decades.
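The orders of magnitude quoted here can be checked with a few lines of arithmetic. The sketch below takes the billion-fold expansion from the text and assumes, for illustration only, that the running time grows by the same factor as the data size; the article does not state the exact slowdown, and the variable names are made up for this example.

```python
EXPANSION_FACTOR = 10**9   # "multiplies the length of messages by a billion"
PLAINTEXT_BYTES = 1_000    # a paragraph of text, about one kilobyte
SECONDS_PER_YEAR = 365.25 * 24 * 3600

# Size of the encrypted paragraph.
ciphertext_bytes = PLAINTEXT_BYTES * EXPANSION_FACTOR
ciphertext_terabytes = ciphertext_bytes / 10**12

# Assumption: a one-second calculation slows down by the same factor.
slowed_seconds = 1 * EXPANSION_FACTOR
slowed_years = slowed_seconds / SECONDS_PER_YEAR

print(f"{ciphertext_terabytes:.0f} TB, about {slowed_years:.1f} years")
```

Under that assumption the one-second calculation stretches to roughly three decades, which is consistent with the "several decades" mentioned above.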

“It is a very nice approach, but one that remains unusable in practice for the moment,” says Jean-Sébastien Coron. “Its lack of efficiency is directly linked to its simplicity: instead of using complex mathematical operations, it simply combines additions and multiplications. As these operations are very easy to reverse, ensuring a certain level of security requires the use of an absolutely huge encryption key”.

The mathematician is therefore developing more efficient algorithms and has already managed to divide the necessary resources by 10,000. “This is a very encouraging result. My approach is both fundamental and pragmatic. I take theoretical processes that already exist and look for tricks to improve them.”

Encrypting artificial intelligence

The researcher also uses such approaches to encrypt not only the data but also the computer programs that analyse it. This technique would be useful when a company runs its programs in the cloud on commercial servers, such as those of Amazon or Google. Cloud computing optimises the use of IT resources, but it also creates the danger that the code itself could be stolen in the event of a security breach. One of his former colleagues founded a startup that uses this type of encryption to protect neural networks, a particular type of algorithm used in artificial intelligence.

Questions about data security posed by Big Data and artificial intelligence require answers at all levels, notes Jean-Sébastien Coron. “It is one thing to allow a web giant to access our information; it’s another to have it taken over without our consent by other private or state actors, as we saw in the NSA and Cambridge Analytica scandals.”

The European Union strengthened protections in 2016 with the General Data Protection Regulation. However, this cannot prevent all problems and abuses: data will continue to be stolen, and we will continue to approve licence agreements, without reading them, every time a new app is installed. “In such a context, constantly keeping the data encrypted would provide superior security,” says the researcher.

Despite his highly theoretical work, the mathematician is no stranger to the world of innovation, to which he has contributed some 20 patents: “I worked for six years in industry in the field of SIM and smart card security. I continue to work in this very practical field. For me, it complements my fundamental work on homomorphic encryption very well”.

About the European Research Council (ERC)

The European Research Council, set up by the EU in 2007, is the premier European funding organisation for excellent frontier research. Every year, it selects and funds the very best creative researchers of any nationality and age to run projects based in Europe. The ERC offers four core grant schemes: Starting, Consolidator, Advanced and Synergy Grants. With its additional Proof of Concept grant scheme, the ERC helps grantees to bridge the gap between their pioneering research and the early phases of its commercialisation. https://erc.europa.eu

The ERC series is written by Daniel Saraga. Its purpose is to showcase the high quality of research in Luxembourg and to show how this research attracts prestigious international funding.